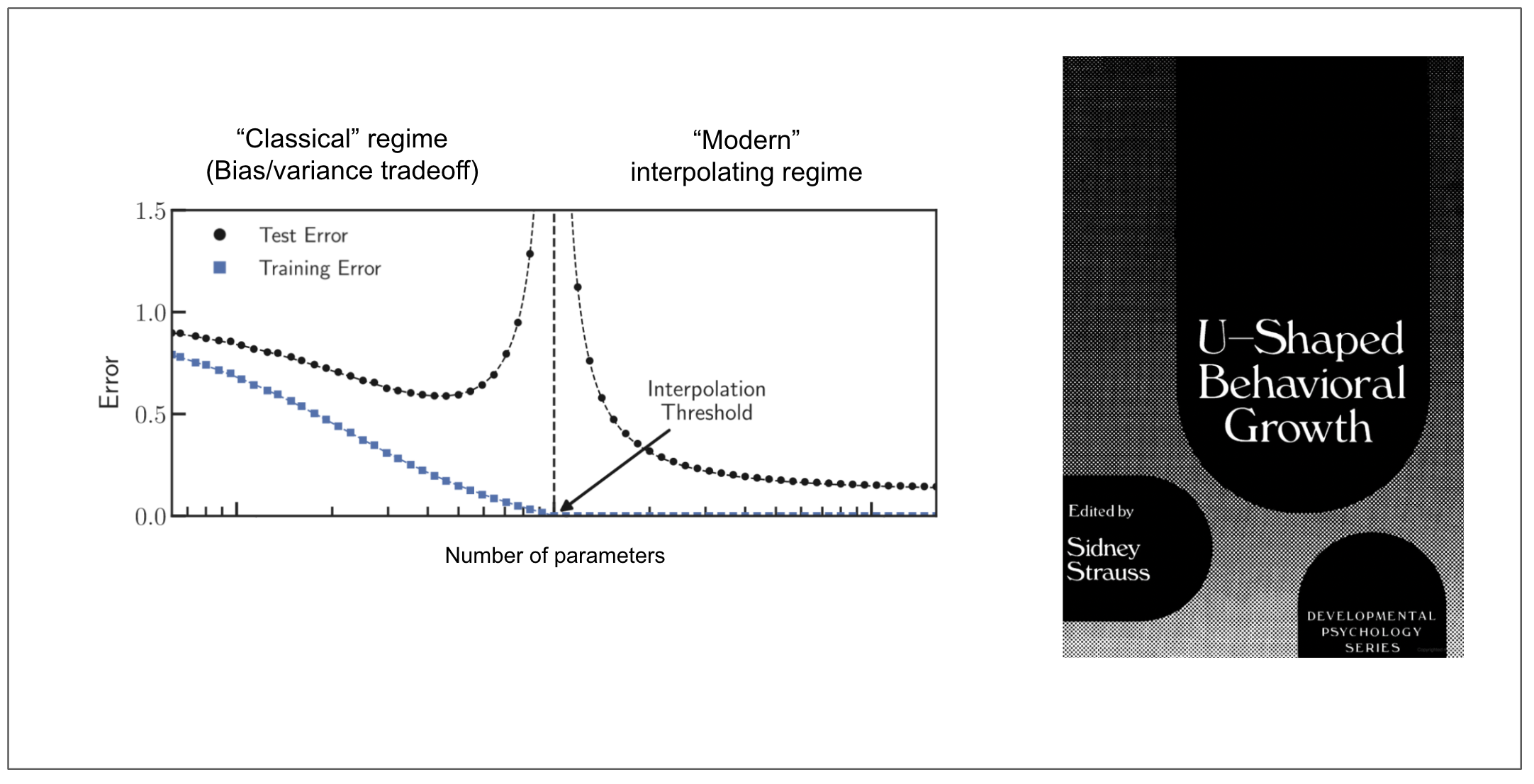

Josh Beckman

The way double descent is normally presented, increasing the number of model parameters can make performance worse before it gets better. But there is another, even more surprising phenomenon called data double descent, where increasing the number of training samples can cause performance to get worse before it gets better. These two phenomena are essentially mirror images of each other, because the explosion in test error depends on the ratio of parameters to training samples: adding parameters or adding samples both move the model toward or away from the same critical ratio.
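To make the ratio argument concrete, here is a minimal sketch of data double descent in the simplest setting I know of: minimum-norm linear regression on synthetic Gaussian data. Everything in it is an assumption chosen for illustration rather than something taken from the text above: the function names `min_norm_test_error` and `sweep_sample_sizes`, the feature count of 30, the noise level, and the use of the pseudoinverse as the fitting rule. In this toy setup the test error should spike when the number of training samples is close to the number of parameters, and fall on either side of that point.

```python
import numpy as np


def min_norm_test_error(n_train, n_features=30, noise_std=0.5, n_test=1000, rng=None):
    """Fit minimum-norm least squares on n_train samples and return test MSE.

    Hypothetical helper for illustration; the data model is synthetic.
    """
    rng = np.random.default_rng() if rng is None else rng

    # Ground-truth weights, scaled so the signal has roughly unit variance.
    beta = rng.normal(size=n_features) / np.sqrt(n_features)

    X_train = rng.normal(size=(n_train, n_features))
    y_train = X_train @ beta + noise_std * rng.normal(size=n_train)

    X_test = rng.normal(size=(n_test, n_features))
    y_test = X_test @ beta + noise_std * rng.normal(size=n_test)

    # The pseudoinverse gives the ordinary least-squares fit when
    # n_train > n_features and the minimum-norm interpolating fit
    # when n_train < n_features.
    beta_hat = np.linalg.pinv(X_train) @ y_train

    return float(np.mean((X_test @ beta_hat - y_test) ** 2))


def sweep_sample_sizes(sample_sizes, n_features=30, n_trials=200, seed=0):
    """Return {n_train: median test MSE over n_trials random problems}.

    The median is used because the error near n_train == n_features is
    heavy-tailed, so a mean would be dominated by a few extreme trials.
    """
    rng = np.random.default_rng(seed)
    results = {}
    for n in sample_sizes:
        errors = [min_norm_test_error(n, n_features, rng=rng) for _ in range(n_trials)]
        results[n] = float(np.median(errors))
    return results


if __name__ == "__main__":
    n_features = 30
    sizes = list(range(5, 95, 5))
    for n, err in sweep_sample_sizes(sizes, n_features=n_features).items():
        ratio = n_features / n
        print(f"samples={n:3d}  params/samples={ratio:5.2f}  median test MSE={err:8.3f}")
```

Reading the printed table from top to bottom, the parameter count is fixed and only the sample count grows, yet the error should rise as the ratio approaches 1 and then fall again. Holding the samples fixed and sweeping `n_features` instead would trace the more familiar model-size version of the same curve.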
